A container packages an application’s code, binaries, and dependencies together, so it includes everything the application needs to run. It runs on any compatible host, whether a cloud server, a dedicated server, a hybrid virtual server, or a developer’s laptop. Containers simplify the development and deployment of applications.

In recent years, containers have become increasingly popular. Many enterprise organizations now host applications in containers instead of, or alongside, virtual machines, and companies like Google run their workloads on vast numbers of containers.

But how do containers work? What software do they rely on? And which tools should your organization consider for its containerized infrastructure?

What Is a Container?

Containers are named for shipping containers. Shipping containers are big boxes in standard sizes. All of the equipment used in shipping — the ships, cranes, forklifts, and trucks — is designed around the standard dimensions of a shipping container. Every port in the world has the equipment to handle shipping containers. Standardization makes shipping faster, cheaper, and more efficient.

In computing, containers fulfill much the same purpose. Containers are “boxes” that hold software. The containers are standardized and isolated from the rest of the system. They contain an application’s code and binaries, utility software, and any external libraries the software depends on.

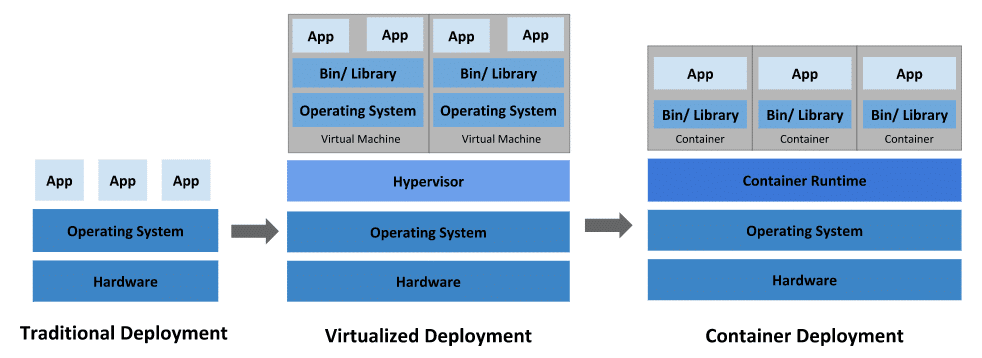

Unlike virtual machines, containers do not include a full operating system. They share the host’s kernel and leave out the superfluous software that comes along with a complete OS. That makes containers smaller and faster than virtual machines: they consume less storage space and memory, and they start much faster, typically in a couple of seconds rather than tens of seconds or minutes.

Looking Beneath the Container Abstraction

In the previous section, we gave a high-level explanation of what a container is. You will often find containers described as “similar to virtual machines but faster and less resource intensive.” Like all high-level abstractions, that’s only accurate up to a point.

In fact, under the hood, containers work very differently from virtual machines.

A container is simply a bundle of running processes that use features of the Linux kernel for isolation and resource allocation. The two most important of those features are cgroups and namespaces.

Cgroups (control groups) limit and isolate the resource usage of a group of processes. They allow processes to be “bundled together” and give each bundle limits on CPU, memory, disk I/O, and other system resources.
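On a Linux host that uses cgroup v2, the limits applied to each control group are exposed as plain-text files under /sys/fs/cgroup, such as cpu.max (a CPU-time quota per period, in microseconds) and memory.max (a byte limit). The minimal Python sketch below interprets those two files. It assumes a cgroup v2 host, and the helper names (parse_cpu_max, parse_memory_max, read_limits) are hypothetical, not part of any library.

```python
from pathlib import Path
from typing import Optional

# Assumption: cgroup v2 (the unified hierarchy) is mounted at /sys/fs/cgroup.
CGROUP_ROOT = Path("/sys/fs/cgroup")


def parse_cpu_max(text: str) -> Optional[float]:
    """Convert the contents of cpu.max ("<quota> <period>") into a CPU count.

    "max 100000" means no limit (returns None); "50000 100000" means the group
    may use 50 ms of CPU time in every 100 ms period, i.e. half a CPU.
    """
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def parse_memory_max(text: str) -> Optional[int]:
    """Convert the contents of memory.max into a byte limit (None = unlimited)."""
    value = text.strip()
    return None if value == "max" else int(value)


def read_limits(cgroup: str) -> dict:
    """Read CPU and memory limits for a cgroup path relative to CGROUP_ROOT.

    Hypothetical helper: files that are absent (for example, when a controller
    is not enabled for that group) are simply skipped.
    """
    base = CGROUP_ROOT / cgroup
    limits = {}
    if (base / "cpu.max").exists():
        limits["cpus"] = parse_cpu_max((base / "cpu.max").read_text())
    if (base / "memory.max").exists():
        limits["memory_bytes"] = parse_memory_max((base / "memory.max").read_text())
    return limits
```

On a cgroup v2 host, a container started with docker run --cpus=0.5 should end up with a cpu.max of 50000 100000, which parse_cpu_max reports as 0.5 CPUs.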

Namespaces limit which parts of the system a process, or group of processes, can see and access. For example, every process belongs to a PID (process ID) namespace. Usually, the operating system has one large tree of processes, but a subtree can be placed in its own namespace, and processes in that namespace cannot see or access any processes outside it. There are also namespaces for other parts of the system, such as mount points (the filesystem), network interfaces, hostnames, and user IDs.
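Linux exposes a process’s namespaces as symbolic links under /proc/<pid>/ns, one per namespace type, whose targets look like pid:[4026531836]. Two processes are in the same namespace when those inode numbers match. The Python sketch below, which assumes a Linux host, reads and compares them. The function names are hypothetical, and reading another user’s /proc/<pid>/ns entries generally requires elevated privileges.

```python
import os
import re
from typing import Dict, Tuple

# Link targets look like "pid:[4026531836]": namespace type, then inode number.
_NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> Tuple[str, int]:
    """Split a /proc/<pid>/ns/* link target into (type, inode)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def namespaces_of(pid: str = "self") -> Dict[str, int]:
    """Map each namespace entry of a process to its inode number (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            _, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        except (OSError, ValueError):
            continue  # skip entries that cannot be read or parsed (e.g. permissions)
        result[name] = inode
    return result


def shared_namespaces(a: Dict[str, int], b: Dict[str, int]) -> Dict[str, bool]:
    """For each namespace type both processes expose, report whether they share it."""
    return {name: a[name] == b[name] for name in a.keys() & b.keys()}
```

Comparing namespaces_of() for a process inside a container with a process on the host would typically show different pid and net values, while two processes in the same container share them.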

Picture a process that can start subprocesses but is isolated from the rest of the system, can access only its own root directory (which contains all the libraries and other software it needs to run), and has limited access to the server’s resources. That is what cgroups and namespaces provide, and that is what a container is.

Docker and other container systems add infrastructure and tooling around these kernel features.

The Components of Docker Containerization

We’ve looked at what containers are, but what about the software used to run and manage containers?

Container image: a container image packages the components needed to run an application as a container, including the code and libraries. It is stored as a set of read-only filesystem layers plus metadata and acts as a template, or snapshot, from which containers are created. Many container instances can be run from the same image.

Container engine: the container engine or runtime is the software that runs containers. It, or associated tools, receives requests from the user and creates running container instances. Docker is the best-known container engine, but there are several others, including LXD and rkt (the latter is no longer maintained).

Registry server: a registry server hosts container repositories, each of which holds related versions of an image identified by tags. The container engine can pull container images from a registry server before running them.

Build configuration file: build configuration files, such as the Dockerfiles used with Docker, contain instructions for building and configuring an image. The instructions might name a base image to pull from a registry, install software, copy in application files, declare the ports the application listens on, and set the command to run when the container starts.

Let’s say you wanted to run WordPress in a container on your server. To do so, you would run commands such as the following:

    docker pull wordpress:latest
    docker run wordpress

The first command pulls the WordPress image tagged latest, by convention the most recent release, from the registry server (Docker Hub by default). The second command runs that image as a container instance.
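A reference such as wordpress:latest is shorthand: Docker fills in a default registry (Docker Hub, docker.io) and, for official images, the library/ namespace. The Python sketch below shows how a reference splits into registry, repository, tag, and digest. It is a simplified approximation of Docker’s rules that performs no validation, and ImageRef and parse_image_ref are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

DEFAULT_REGISTRY = "docker.io"  # Docker Hub, used when no registry is named


@dataclass(frozen=True)
class ImageRef:
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into its parts (simplified; no validation)."""
    name, _, digest = ref.partition("@")

    # A colon after the last "/" introduces a tag; an earlier colon is a registry port.
    tag = None
    if name.rfind(":") > name.rfind("/"):
        name, tag = name.rsplit(":", 1)

    # The first path component is a registry only if it looks like a hostname.
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name

    # Docker Hub's official images live under the "library/" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = "library/" + repository

    # With neither a tag nor a digest, Docker assumes "latest".
    if tag is None and not digest:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest or None)
```

Because an omitted tag defaults to latest, docker run wordpress in the example above refers to the same image as wordpress:latest.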

The above example is simplified: it omits networking and other configuration details. Most importantly, it lacks the database WordPress requires. Containers allow developers and DevOps professionals to split an application across multiple containers, so to run WordPress, you would run a MySQL container alongside the WordPress container.
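A minimal sketch of that two-container setup, written as a Python script that assembles the docker commands. It assumes the official mysql and wordpress images from Docker Hub, which read the environment variables shown (MYSQL_DATABASE, WORDPRESS_DB_HOST, and so on) when they start. The network name, container names, credentials, host port, and mysql:8.0 tag are hypothetical choices for illustration.

```python
import shlex
from typing import List

# Hypothetical names and credentials, for illustration only.
NETWORK = "wp-net"
DB_CONTAINER = "wp-db"
DB_NAME, DB_USER, DB_PASSWORD = "wordpress", "wp_user", "change-me"


def wordpress_stack_commands() -> List[List[str]]:
    """Return the docker commands (as argv lists) for a WordPress + MySQL setup."""
    return [
        # A user-defined network lets the containers reach each other by name.
        ["docker", "network", "create", NETWORK],
        # Database container: the mysql image creates this database and user on first start.
        ["docker", "run", "-d", "--name", DB_CONTAINER, "--network", NETWORK,
         "-e", "MYSQL_RANDOM_ROOT_PASSWORD=1",
         "-e", f"MYSQL_DATABASE={DB_NAME}",
         "-e", f"MYSQL_USER={DB_USER}",
         "-e", f"MYSQL_PASSWORD={DB_PASSWORD}",
         "mysql:8.0"],
        # WordPress container: points at the database by container name and
        # publishes its port 80 on port 8080 of the host.
        ["docker", "run", "-d", "--name", "wp-app", "--network", NETWORK,
         "-p", "8080:80",
         "-e", f"WORDPRESS_DB_HOST={DB_CONTAINER}",
         "-e", f"WORDPRESS_DB_NAME={DB_NAME}",
         "-e", f"WORDPRESS_DB_USER={DB_USER}",
         "-e", f"WORDPRESS_DB_PASSWORD={DB_PASSWORD}",
         "wordpress:latest"],
    ]


if __name__ == "__main__":
    # Print the commands; passing each list to subprocess.run(cmd, check=True)
    # would execute them on a machine with Docker installed.
    for cmd in wordpress_stack_commands():
        print(shlex.join(cmd))
```

Putting both containers on a user-defined network lets the WordPress container find the database by its container name, which is why WORDPRESS_DB_HOST is set to wp-db rather than to an IP address.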

An application may use many different containers. The application itself might be divided into microservices. Containers may be duplicated for redundancy or performance. It gets complicated quickly, and it’s not uncommon to run hundreds or thousands of containers. Organizations that need to run and manage many containers use orchestration software.

Orchestration Tools for Working With Docker

Several orchestration platforms can manage containers, but we’re going to focus on two of the most popular orchestration tools for Docker: Docker Swarm and Kubernetes.

What Is Container Orchestration Software?

Container orchestration controls and automates many of the functions needed to run large container deployments. It handles provisioning and deployment, scaling, redundancy, resource allocation, load balancing, service discovery, and more.

It would be impractical to manage a complex web application with a hundred different containers by hand. Instead, DevOps professionals use configuration files to describe the deployment: the container images to use, the connections between containers, and so on.

The orchestration software then automatically deploys and manages clusters of containers, often across many different servers. When you want to deploy more containers, the orchestration software looks at the resources available on your servers and other constraints before deploying the container to the appropriate host.
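A simplified sketch of that placement decision, in Python. It filters out hosts without enough free CPU and memory, then scores the remaining hosts and picks the one left with the most free memory, which tends to spread containers across servers. Real schedulers weigh many more constraints (Kubernetes’ scheduler, for instance, also works in a filtering phase and a scoring phase); the Host and ContainerRequest types and the scoring rule here are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Host:
    name: str
    free_cpus: float
    free_memory_mb: int


@dataclass
class ContainerRequest:
    name: str
    cpus: float
    memory_mb: int


def schedule(request: ContainerRequest, hosts: List[Host]) -> Optional[str]:
    """Choose a host for a container and reserve its resources there.

    Returns the chosen host's name, or None if no host can fit the request.
    """
    # Filter: keep only hosts with enough free CPU and memory.
    candidates = [h for h in hosts
                  if h.free_cpus >= request.cpus and h.free_memory_mb >= request.memory_mb]
    if not candidates:
        return None
    # Score: prefer the host with the most memory (then CPU) left after placement.
    best = max(candidates, key=lambda h: (h.free_memory_mb - request.memory_mb,
                                          h.free_cpus - request.cpus))
    # Reserve the resources so later placements see the reduced capacity.
    best.free_cpus -= request.cpus
    best.free_memory_mb -= request.memory_mb
    return best.name
```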

Choosing Orchestration Software for Your Company

Kubernetes was created by Google before being open-sourced. It is an enormously powerful orchestration platform, and it is widely used and trusted by enterprise organizations.

Kubernetes handles many aspects of container deployment and management, such as automatic scaling, automatic restarting of containers, replication, and load balancing. Kubernetes groups machines into clusters. Each cluster is managed by a control plane (historically called the cluster master) that controls many worker nodes, the physical or virtual machines on which the containers actually run.
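Automatic restarts and replication rest on a simple idea: a controller repeatedly compares the desired state (for example, three replicas) with what is actually running and acts to close the gap. The Python sketch below illustrates that reconciliation loop under simplified assumptions. It is not Kubernetes code, and the function and container names are hypothetical.

```python
import itertools
from typing import Iterator, List, Set, Tuple

Action = Tuple[str, str]  # ("start" or "stop", container name)


def reconcile(desired: int, running: Set[str], names: Iterator[str]) -> List[Action]:
    """Compare the desired replica count with what is running and
    return the actions that would close the gap."""
    actions: List[Action] = []
    if len(running) < desired:
        # Replace crashed containers or scale up, using fresh names.
        for _ in range(desired - len(running)):
            actions.append(("start", next(names)))
    elif len(running) > desired:
        # Scale down by stopping surplus containers (sorted for determinism).
        for name in sorted(running)[desired:]:
            actions.append(("stop", name))
    return actions


def apply_actions(actions: List[Action], running: Set[str]) -> None:
    """Pretend to carry out the actions by updating the set of running containers."""
    for verb, name in actions:
        if verb == "start":
            running.add(name)
        else:
            running.discard(name)


if __name__ == "__main__":
    names = (f"web-{i}" for i in itertools.count())
    running: Set[str] = set()
    apply_actions(reconcile(3, running, names), running)  # scale up to 3 replicas
    running.discard("web-1")                              # simulate a crashed container
    print(reconcile(3, running, names))                   # [('start', 'web-3')]
```

Running the loop again and again, rather than reacting to individual events, means the system converges on the desired state even if an earlier action failed.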

Kubernetes is well-suited to enterprise-scale deployments, but it is also complex. For smaller deployments, Kubernetes may be overkill.

Docker Swarm, created by Docker, Inc. (the company behind the Docker container system), fulfills many of the same functions as Kubernetes. However, Swarm is simpler to use and lacks some of the extensibility and multi-environment capabilities of Kubernetes.

Swarm transforms multiple hosts into one virtual host: a pool of resources onto which containers can be launched. Conceptually, Swarm is similar to Kubernetes: a swarm corresponds to a Kubernetes cluster, and a Swarm manager node plays a role similar to the cluster master.

In Summary

Which orchestration solution is right for your business? Kubernetes and Docker Swarm are excellent tools that can be used at scale. Kubernetes is best suited to large-scale container deployments. Consider Swarm if you are experimenting with containers and Docker, want to avoid the configuration overhead of Kubernetes, or intend to deploy containers in a simple multi-server environment.

VPS House Technology Group’s Dedicated Server and Hybrid Server platforms offer a secure, low-cost, and customizable hosting platform for your application containers. Book a free chat today to discuss your container hosting needs with the team of server experts at VPS House Technology Group.